Message boards : Theory Application : Windows Version


| Author | Message |

|---|---|

Laurence CERN Joined: 12 Sep 14 Posts: 1152 Credit: 342,328 RAC: 0 |

Error result: https://lhcathomedev.cern.ch/lhcathome-dev/result.php?resultid=2753359 Strange. I don't understand this. Nothing has changed. |

|

Joined: 13 Feb 15 Posts: 1259 Credit: 1,022,816 RAC: 169 |

Another job is hanging. There has been no activity in the Console for more than 20 minutes, but it is still using CPU. I will end that task. Result: https://lhcathomedev.cern.ch/lhcathome-dev/result.php?resultid=2753353 It will be reported in a few minutes. |

|

Joined: 22 Apr 16 Posts: 782 Credit: 4,057,880 RAC: 0 |

This task shows no RDP in BOINC: https://lhcathomedev.cern.ch/lhcathome-dev/result.php?resultid=2753357 It finished after 1:30 hours with exit status 1 (0x00000001) Unknown error code |

|

Joined: 22 Apr 16 Posts: 782 Credit: 4,057,880 RAC: 0 |

7 Theory tasks finished with points, but only five are listed in MCProd for today.

Magic Quantum Mechanic Joined: 8 Apr 15 Posts: 848 Credit: 15,957,878 RAC: 4,190 |

"This task shows no RDP in BOINC:"

2019-02-21 14:13:32 (6684): Guest Log: 13:13:32 2019-02-21: cranky: [INFO] Running Container 'runc'.

2019-02-21 15:36:43 (6684): Guest Log: 14:36:42 2019-02-21: cranky: [ERROR] Container 'runc' failed.

And as you can see, *cranky* decided to be cranky 1 hour 23 minutes later. |
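The gap between the two Guest Log entries quoted above can be checked with a few lines of Python. This is only a minimal sketch: it assumes the host-side timestamp always occupies the first 19 characters of a line in `YYYY-MM-DD HH:MM:SS` form, and the helper names `host_timestamp` and `elapsed` are made up for illustration.

```python
from datetime import datetime, timedelta

# The two log lines quoted in the post above.
LOG_LINES = [
    "2019-02-21 14:13:32 (6684): Guest Log: 13:13:32 2019-02-21: cranky: [INFO] Running Container 'runc'.",
    "2019-02-21 15:36:43 (6684): Guest Log: 14:36:42 2019-02-21: cranky: [ERROR] Container 'runc' failed.",
]


def host_timestamp(line: str) -> datetime:
    """Parse the leading host-side timestamp (assumed to be the first 19 characters)."""
    return datetime.strptime(line[:19], "%Y-%m-%d %H:%M:%S")


def elapsed(start_line: str, end_line: str) -> timedelta:
    """Return the time between two log lines, based on their host timestamps."""
    return host_timestamp(end_line) - host_timestamp(start_line)


if __name__ == "__main__":
    print(elapsed(LOG_LINES[0], LOG_LINES[1]))  # prints 1:23:11
```

The result agrees with the "1 hour 23 minutes" mentioned in the post.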

Magic Quantum Mechanic Joined: 8 Apr 15 Posts: 848 Credit: 15,957,878 RAC: 4,190 |

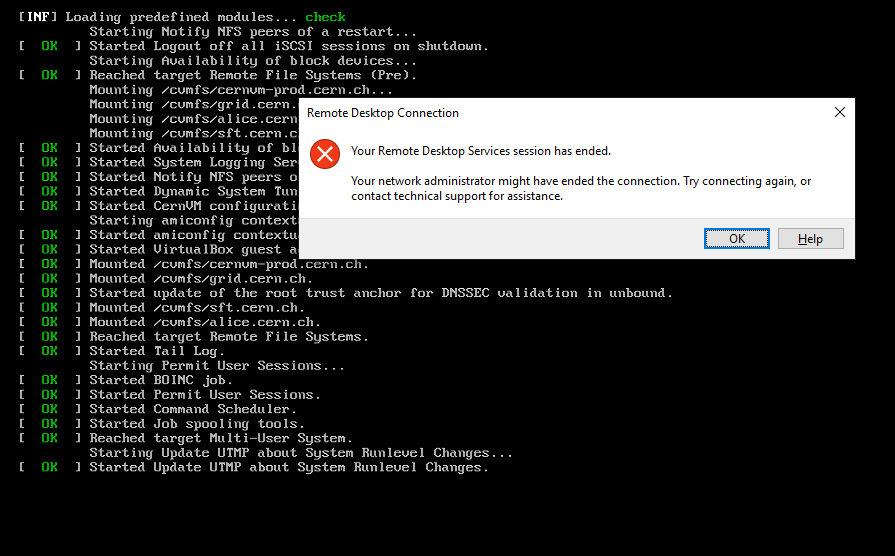

I started my first task and, after about 3 minutes of watching the VM Console, it stopped, which means the task stopped in VirtualBox. A couple of minutes later it became an error in the BOINC Manager: https://lhcathomedev.cern.ch/lhcathome-dev/result.php?resultid=2753275

00:00:44.303474 NAT: DHCP offered IP address 10.0.2.15

00:02:51.044715 Console: Machine state changed to 'Stopping'

00:02:51.045041 Console::powerDown(): A request to power off the VM has been issued (mMachineState=Stopping, InUninit=0)

00:02:51.045056 Display::handleDisplayResize: uScreenId=0 pvVRAM=0000000008b20000 w=800 h=600 bpp=32 cbLine=0xC80 flags=0x1

00:02:51.170481 VRDP: Logoff: HAL_5778XX (::1) build 17763. User: [] Domain: [] Reason 0x0001.

00:02:51.170635 VRDP: Connection closed: 1

00:02:51.170724 VBVA: VRDP acceleration has been disabled.

00:02:51.171367 VRDP: TCP server closed.

00:02:51.172944 Changing the VM state from 'RUNNING' to 'POWERING_OFF' |

Laurence CERN Joined: 12 Sep 14 Posts: 1152 Credit: 342,328 RAC: 0 |

"7 Theory tasks finished with points, but only five are listed in MCProd for today."

The jobs are submitted in batches, so they are only reported once all have finished, hence the delay. I can see if there is something I can do to speed this up. |

Laurence CERN Joined: 12 Sep 14 Posts: 1152 Credit: 342,328 RAC: 0 |

I have been watching your tasks. I am surprised by the issues, as the only main difference between this and the old approach is that the jobs go via the front door rather than the back door. I did see that you had at least one valid task. Updating the CVMFS configuration to use Cloudflare may help. |

Magic Quantum Mechanic Joined: 8 Apr 15 Posts: 848 Credit: 15,957,878 RAC: 4,190 |

I have 4 Valids, 2 each on 2 different PCs (the old 3-core that I made to run 4 cores got these since I had -dev turned on from before and it was supposed to be running only LHC Theory). I have 2 new ones that have made it past 20 minutes, so I will see how these go. But I got over 10 tasks since those Valids that lasted only about 8 minutes before they became errors:

cranky: [ERROR] 'cvmfs_config probe alice.cern.ch' failed. |

Magic Quantum Mechanic Joined: 8 Apr 15 Posts: 848 Credit: 15,957,878 RAC: 4,190 |

2 more Invalids:

cranky: [ERROR] Container 'runc' failed.

This one seems to be running (3 hours at 15%). |

|

Joined: 13 Feb 15 Posts: 1259 Credit: 1,022,816 RAC: 169 |

Since the result upload was fixed in v4.16: 30 Valids, 1 error (runc failed, reported in this thread) and 1 task that I stopped myself. I have my first sherpa running on Windows. That seems to be a long one. I figured out the job description using PowerShell:

PS D:\> Get-Content D:\boinc1\slots\0\shared\input | Select -Index 10

echo "runspec=boinc pp z1j 7000 250 - sherpa 2.2.4 default 100000 20"

I'm now running 4 dual-core Windows tasks and 1 single-core Linux VM on my Windows host. CPU usage is between 13% and 19% per task (8 threads = 100%), with a very rare spike to 25%. |
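For anyone who wants to pull the job description out of that `runspec` line programmatically, here is a rough Python sketch. The field names in `FIELD_NAMES` are my own guess at what the eleven space-separated values mean (they are not confirmed anywhere in this thread), and `parse_runspec` is a hypothetical helper, not part of any BOINC or Theory tooling.

```python
import re

# ASSUMPTION: these labels are illustrative guesses for the eleven values
# in a runspec line; the thread does not document their meaning.
FIELD_NAMES = (
    "mode", "beam", "process", "energy", "params", "specific",
    "generator", "version", "tune", "events", "seed",
)


def parse_runspec(line: str) -> dict:
    """Extract the runspec values from a line such as the one shown in the post."""
    match = re.search(r'runspec=([^"\n]+)', line)
    if match is None:
        raise ValueError("no runspec found in line")
    values = match.group(1).split()
    if len(values) != len(FIELD_NAMES):
        raise ValueError(f"expected {len(FIELD_NAMES)} values, got {len(values)}")
    return dict(zip(FIELD_NAMES, values))


if __name__ == "__main__":
    line = 'echo "runspec=boinc pp z1j 7000 250 - sherpa 2.2.4 default 100000 20"'
    spec = parse_runspec(line)
    print(spec["generator"], spec["version"])  # prints: sherpa 2.2.4
```

The values are kept as strings on purpose, since some of them (for example `150,-,2360` in other jobs in this thread) are not plain numbers.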

|

Joined: 22 Apr 16 Posts: 782 Credit: 4,057,880 RAC: 0 |

There is a new .vdi for Windows. I have just seen it in a Theory download! |

|

Joined: 13 Feb 15 Posts: 1259 Credit: 1,022,816 RAC: 169 |

"There is a new .vdi for Windows."

I got a new task after your post, but I still have Theory_2019_02_20.vdi in use. No new vdi. |

Laurence CERN Joined: 12 Sep 14 Posts: 1152 Credit: 342,328 RAC: 0 |

"There is a new .vdi for Windows."

There was no new vdi for Windows when you posted, but there is now (Theory_2019_02_22.vdi). It contains the OpenHTC.io proxy configuration, so it will hopefully help with issues like those Magic has been experiencing. |

Magic Quantum Mechanic Joined: 8 Apr 15 Posts: 848 Credit: 15,957,878 RAC: 4,190 |

"There is a new .vdi for Windows."

Thanks Laurence, I am downloading that right now, and since it is after 2am it will download fast (about 15 minutes), as fast as 4.5 Mbps right now. The only problem is that I will do this on only one host for now, since this will probably come close to using up my high-speed allowance for the current month, but I will try to download on another host starting after 8am and hope that it finishes before 2am the following day (and I will check my ISP stats around noon just so I don't waste that right now).

Theory Simulation v4.18 - Theory_2019_02_22.vdi

I hope the new vdi does the trick. |

|

Joined: 10 Mar 17 Posts: 40 Credit: 108,345 RAC: 0 |

Considering how the new vbox app works, is it still worth using a local proxy server? If yes, are there plans to implement that? |

|

Joined: 28 Jul 16 Posts: 531 Credit: 400,710 RAC: 0 |

"Considering how the new vbox app works, is it still worth using a local proxy server?"

My guess: the vbox app (Windows, Mac) may need it much more than the Linux native app, as the latter keeps its CVMFS content persistent. |

|

Joined: 13 Feb 15 Posts: 1259 Credit: 1,022,816 RAC: 169 |

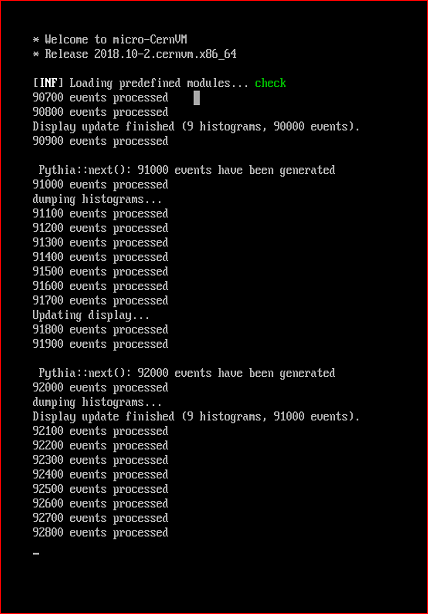

I have 2 tasks running now with no further output to the Console. Similar picture to the one in: https://lhcathomedev.cern.ch/lhcathome-dev/forum_thread.php?id=451&postid=6033

I said in that message that it was using the CPU, but now that I'm running dual-core VMs it uses 200% CPU of a core. So it's very busy with ...... what?

Jobs:

boinc pp jets 13000 150,-,2360 - pythia8 8.230 tune-A2 100000 20

boinc ee zhad 34.8 - - pythia8 8.235 tune-fischer1 100000 20

Maybe it has nothing to do with the jobs themselves, but could it be the Tail Log eating the CPU?

lhcathome-dev Theory_2279-752178-20_2 4.18 Theory Simulation (vbox64_mt_mcore) 00:31:50 elapsed (01:05:13 CPU) Running 204.8% CPU

lhcathome-dev Theory_2279-766390-20_2 4.18 Theory Simulation (vbox64_mt_mcore) 00:29:58 elapsed (01:01:22 CPU) Running 204.7% CPU

Edit: Theory_2279-766390-20_2 was validated OK, but as you can see at the end: [ERROR] Container 'runc' failed, and there is no line about preparing output. |
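The CPU percentages in the two task lines above can be cross-checked from the elapsed and CPU times. The sketch below is only illustrative: it assumes the percentage is simply CPU time divided by elapsed time, and the helper names `to_seconds` and `cpu_percent` are hypothetical.

```python
def to_seconds(hms: str) -> int:
    """Convert an HH:MM:SS string into a number of seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def cpu_percent(elapsed: str, cpu: str) -> float:
    """CPU time as a percentage of elapsed time (100% = one fully used core)."""
    return 100.0 * to_seconds(cpu) / to_seconds(elapsed)


if __name__ == "__main__":
    # (elapsed, CPU) pairs copied from the two task lines in the post.
    for elapsed, cpu in (("00:31:50", "01:05:13"), ("00:29:58", "01:01:22")):
        print(f"{elapsed} elapsed, {cpu} CPU -> {cpu_percent(elapsed, cpu):.1f}%")
```

Both values come out just above 200%, within about a tenth of a percent of the 204.8% and 204.7% reported, which fits a dual-core VM keeping both cores fully busy.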

|

Joined: 13 Feb 15 Posts: 1259 Credit: 1,022,816 RAC: 169 |

2 other jobs without output to the Console, each consuming almost 2 cores:

Theory_2279-762078-20_1 - [boinc ppbar ue 1800 - - pythia6 6.428 362 100000 20]

Theory_2279-796930-20_0 - [boinc pp ttbar 7000 - - pythia6 6.427 ambt1 100000 20]

Edit: I dug a bit and came to the conclusion that all tasks from version 4.18, for me and other Windows users, have this strange task ending, broken Console output and higher CPU usage. Question: Is the science outcome valid? |

Magic Quantum Mechanic Joined: 8 Apr 15 Posts: 848 Credit: 15,957,878 RAC: 4,190 |

The first 6 tasks I ran were Valid, ran from 90 minutes to about 120 minutes, and ended with:

cranky: [ERROR] Container 'runc' failed.

The next 8 Valids ran for only about 8 minutes each, ending with:

cranky: [ERROR] 'cvmfs_config probe alice.cern.ch' failed.

https://lhcathomedev.cern.ch/lhcathome-dev/results.php?userid=192

But now I can't get any new tasks, and the log says:

2/22/2019 1:27:20 PM | lhcathome-dev | This computer has finished a daily quota of 4 tasks

That usually happens only when you already have too many Invalids, and I only got one.

Max # jobs No limit
