CMS Application: CMS jobs becoming available again



**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)

Hi, I'm back (as my colleague Daniela would say, "from the slough of despond"). You may have noticed some small batches of CMS jobs running at LHC@Home-dev over the last few months as we ironed out several technical and a few more political issues. As it stands, the status is that:

Laurence's VMs, running as T3_CH_CMSAtHome, can merge the result files into much larger (~3 GB) files and write these to central CMS storage; they can also merge the logs of the MC jobs onto CMS storage, and similarly write the logs of the merge jobs themselves.


**Magic Quantum Mechanic** (Joined: 8 Apr 15; Posts: 849; Credit: 15,973,123; RAC: 3,955)

Welcome back, Ivan. I will run a few myself.

**Magic Quantum Mechanic** (Joined: 8 Apr 15; Posts: 849; Credit: 15,973,123; RAC: 3,955)

It looks like they are still not working here: `[ERROR] Condor exited after 1556s without running a job.`


(Joined: 13 Feb 15; Posts: 1262; Credit: 1,025,626; RAC: 323)

I returned my first task this morning and got an EXIT_INIT_FAILURE, probably because of my nightly shutdown and this morning's resume. Before the shutdown the task was suspended and the VM was properly saved, so it seems long suspensions are still not appreciated. Before yesterday evening's shutdown, I saw several cmsRun jobs run one after another. These are the job IDs I could find that were returned successfully by my host (HostName 38-37-7284) from the batch wmagent_ireid_TC_OneTask_IDR_CMS_Home_190306_163044_2983:

- `e45a0085-d89b-40b5-ae7e-503741ca9aa1-121_0`
- `e45a0085-d89b-40b5-ae7e-503741ca9aa1-163_0`
- `e45a0085-d89b-40b5-ae7e-503741ca9aa1-66_0`

BOINC result: https://lhcathomedev.cern.ch/lhcathome-dev/result.php?resultid=2757800
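The job IDs above look like a workflow UUID, a job number and a trailing `_N` suffix (the dashboard record further down this thread shows the same shape: `EventRange` is the UUID plus job number, and `SchedulerJobId` adds `_0`). A minimal Python sketch for splitting such IDs, for example to sort them by job number, assuming that layout holds; `parse_job_id` and `JobId` are hypothetical names, and reading the suffix as a retry counter is only a guess:

```python
import re
from typing import NamedTuple

# Hypothetical pattern, inferred only from the IDs quoted in this thread:
#   <workflow UUID>-<job number>_<suffix>
# Treating <suffix> as a retry counter is an assumption.
JOB_ID_RE = re.compile(
    r"^(?P<uuid>[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})"
    r"-(?P<job>\d+)_(?P<suffix>\d+)$"
)


class JobId(NamedTuple):
    uuid: str    # workflow/batch UUID shared by all jobs in the batch
    job: int     # job number within the batch
    suffix: int  # trailing _N (assumed, not confirmed, to be a retry count)


def parse_job_id(job_id: str) -> JobId:
    """Split a job ID of the assumed form into its parts."""
    m = JOB_ID_RE.match(job_id)
    if m is None:
        raise ValueError(f"unrecognised job ID: {job_id!r}")
    return JobId(m["uuid"], int(m["job"]), int(m["suffix"]))


if __name__ == "__main__":
    ids = [
        "e45a0085-d89b-40b5-ae7e-503741ca9aa1-121_0",
        "e45a0085-d89b-40b5-ae7e-503741ca9aa1-163_0",
        "e45a0085-d89b-40b5-ae7e-503741ca9aa1-66_0",
    ]
    # Sort numerically by job number rather than as strings.
    for jid in sorted(ids, key=lambda s: parse_job_id(s).job):
        print(parse_job_id(jid))
```

Sorting on the parsed integer matters here: as plain strings, `-121_0` would sort before `-66_0`.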

**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)

> It looks like they are still not working here.

Hmm, yes, that was because the jobs now run in a Singularity container within the VM, at CMS's insistence, and Laurence hadn't configured the wrapper to pick up the output messages. This has now been done, but there appears to be a typo in the script: at rundown the task complains about an unmatched quote character and hangs... We're working on fixing that.

**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)


**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)

> It looks like they are still not working here.

OK, things look a little unstable now. It might be best to set No New Tasks until we find the problem in the script. :-(

**Magic Quantum Mechanic** (Joined: 8 Apr 15; Posts: 849; Credit: 15,973,123; RAC: 3,955)

> Hmm, yes, that was because the jobs now run in a singularity container within the VM, at CMS's insistence, and Laurence hadn't configured the wrapper to pick up the output messages. This has now been done, but it appears there is a typo in the script: at rundown the task complains that there is an unmatched quote character and hangs...

I just got up, and the first thing I did today was check the CMS tasks I left running last night: after the first few failed, I got 3 Valids!

- https://lhcathomedev.cern.ch/lhcathome-dev/result.php?resultid=2757784
- https://lhcathomedev.cern.ch/lhcathome-dev/result.php?resultid=2757823
- https://lhcathomedev.cern.ch/lhcathome-dev/result.php?resultid=2757820


(Joined: 20 Mar 15; Posts: 243; Credit: 901,716; RAC: 0)

Welcome back, Ivan. "The good news is that a new grant has been received and I should go back to full-time work" is good news indeed, but "slough of despond" is putting it a bit strongly.

> and Laurence hadn't configured the wrapper to pick up the output messages.

Perhaps that's why there is no output on the "running job", "wrapper" or "error" terminals, although cmsRun is shown as using >90% CPU. I accidentally quit `top` and don't know how to restart it, so I can't see whether it's still running. How can `top` (F3) be restarted if accidentally quit?

**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)


**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)

I've been out of the loop too long... Whew, finally someone responded. I have a suspicion that there's a holiday around now; certainly when I was working at UZH/PSI I never knew when a holiday was coming up, especially around Lent/Easter/Ascension... So, jobs are available again. Now I need to go read my mail to see who I've p*ssed off!

**Magic Quantum Mechanic** (Joined: 8 Apr 15; Posts: 849; Credit: 15,973,123; RAC: 3,955)

I have 13 Valids in a row and more running, all on Windows OS on Intel and AMD.

**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)


**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)

> I have 13 Valids in a row and more running all on Windows OS on Intel and AMD

Just to give an idea, beyond the job graphs: in the current batch we have 103 merged result files in the CMS repository, totalling 264 GB.

`[lxplus019:cath] > ls -l /eos/cms/store/backfill/1/CMSSW_10_4_0/RelValJpsiMuMu_Pt-8/GEN-SIM/JpsiMuMu_Pt_8_forSTEAM_13TeV_TuneCUETP8M1_2018_GenSimFull_TC_OneTask_CMS_Home_IDRv4i-v11/00000/`

`total 514803101`

Every entry has mode `-rw-r--r--.`, link count 1, owner `cmsprd` and group `zh`:

| Size (bytes) | Modified | File |
|---:|---|---|
| 2443985009 | Mar 10 13:54 | 07C7B80E-DCCE-8A4B-8DAA-7C2126016E4C.root |
| 2390422647 | Mar 6 20:13 | 0F1DDE45-97EC-7E4C-AFF5-777CDD655AB5.root |
| 2410369896 | Mar 10 12:39 | 115DFE2D-C3C9-864C-8874-868653C0880B.root |
| 2869529431 | Mar 10 01:16 | 13C14B39-3888-924C-9B66-8A077F29B3D4.root |
| 1589649593 | Mar 9 11:40 | 162AFFA1-DCB5-8F40-8BC7-B668A69FF711.root |
| 2627714769 | Mar 9 20:17 | 176922CF-3A5D-9342-BAB3-21F87D84ADDE.root |
| 2406317039 | Mar 10 11:12 | 1B5449B2-3DC9-E84A-BB93-77A5C294AD87.root |
| 2781323559 | Mar 10 00:31 | 1BB62E8A-A136-C04B-B2C3-B7254F935CB2.root |
| 2782841560 | Mar 10 10:50 | 1C1CC6FF-DFF5-0140-8FB2-6DD7FB7D972C.root |
| 2341317236 | Mar 9 23:31 | 1F571756-4C4F-BB42-82A2-53EE4F62E170.root |
| 2449295391 | Mar 10 06:45 | 21DCECD1-BF5B-CC4F-B1BF-D176B1830EB0.root |
| 2482599152 | Mar 10 11:56 | 28B8320F-F33B-724E-99DB-040D6D7C33BA.root |
| 2344489145 | Mar 10 09:03 | 2E6D775D-0766-D84F-B518-FFDF43582727.root |
| 2370097528 | Mar 6 23:22 | 2E7EDEDD-A642-BB4A-A24F-72A685ABE659.root |
| 2413608279 | Mar 10 02:40 | 3C90A7D2-DB65-C44C-98B8-11B9472A7246.root |
| 2773534708 | Mar 9 16:38 | 3CDD1841-978F-7544-AF42-465C291F70F5.root |
| 2179655860 | Mar 9 15:18 | 42069ADB-AED0-0F48-8757-2582926FB514.root |
| 1953758651 | Mar 7 03:32 | 42778B0C-D2F8-7047-8F23-FCC0CE44DE7E.root |
| 2359352925 | Mar 10 14:12 | 48B5F41C-9062-634E-880A-F7CBB7DE1815.root |
| 2276298271 | Mar 7 02:42 | 494E85A1-ECA5-374F-8342-65855E5C044B.root |
| 2393251990 | Mar 9 17:28 | 4C293CF1-CE09-8F4E-A10A-41BFCB94D859.root |
| 2553870862 | Mar 10 00:48 | 4C348C13-8299-1F4F-A421-5812F099C0EE.root |
| 2534181859 | Mar 10 06:10 | 4C905104-63A6-6341-BF6A-3BC9BF18A543.root |
| 2638319658 | Mar 7 01:23 | 4CB054E8-AF89-0D49-8658-471C738B57D0.root |
| 4255219307 | Mar 9 12:18 | 4CF439BD-326B-2C4F-8301-0FC393CC8EB3.root |
| 1849903713 | Mar 10 05:28 | 51EBA38B-FBD9-E94A-BD9A-2131309D9DEF.root |
| 2564484009 | Mar 10 13:03 | 5329788E-441F-0948-B588-87938B3D6A28.root |
| 2721913710 | Mar 10 08:34 | 549240AD-E57D-314F-86C3-7DBCCF46EBFA.root |
| 4267661234 | Mar 9 12:43 | 552B3A84-39BC-784E-92A3-38AC76CE9E6E.root |
| 2581056502 | Mar 9 20:57 | 554476E9-F8D2-5F43-8D56-9D721A7AD048.root |
| 4262708668 | Mar 9 12:05 | 5D4D1E3B-E7FC-E34D-A765-AD8E416D40BC.root |
| 2257675282 | Mar 7 08:19 | 601AFA44-FC7A-4747-9959-D65EDB16EFE0.root |
| 2741679046 | Mar 10 09:39 | 6093C00E-238C-2E4F-9395-86B17308A37F.root |
| 2638798270 | Mar 10 01:57 | 60E592B1-41E5-1F42-A4A2-DB2B9A6A8B27.root |
| 2533246225 | Mar 10 14:36 | 6140106D-B6BF-9944-8EDB-8021E16D6C5B.root |
| 2219852584 | Mar 10 05:45 | 619CB38D-9504-7A45-A4E2-72EF14275FBE.root |
| 2749693533 | Mar 10 07:46 | 62B3F93D-FAA3-9446-A103-7015F617EA81.root |
| 4286333926 | Mar 9 12:18 | 63E53D0C-5F81-FF41-B40C-7E955E9E5D65.root |
| 4250062344 | Mar 9 12:33 | 65DD3888-0C4A-F84A-9D34-43DA5B0DCA0B.root |
| 2277617321 | Mar 7 05:29 | 6B010649-1480-7E4D-A9B6-FCC4C79B605F.root |
| 3242243166 | Mar 10 08:36 | 6EE3933F-7956-084E-9677-E7981D82E94C.root |
| 2703498118 | Mar 9 17:57 | 70C4130D-CE11-F340-8848-11B83851C0A1.root |
| 2362990310 | Mar 9 22:27 | 73685B9D-0EC0-7640-8AAC-C5A928C2C342.root |
| 2285926328 | Mar 9 20:35 | 744C32F9-408C-C641-BC77-FC77FF65F0A9.root |
| 2410995457 | Mar 9 18:24 | 7462304B-BAD3-5042-AD72-32826BEE0F8A.root |
| 2779635293 | Mar 10 09:58 | 74AE7D06-F851-4E4E-BE3E-4610E4E171EA.root |
| 2217761223 | Mar 10 03:17 | 7B7B12FC-2008-954B-A9C1-C4658FFF1986.root |
| 2247580021 | Mar 9 21:55 | 7CC3C082-3A3A-2149-94E4-2B562B078E6D.root |
| 2392341056 | Mar 10 12:18 | 7E580870-5B94-544E-80E4-43F59A25D352.root |
| 2156384815 | Mar 7 09:13 | 7F7D0C98-58CE-2B4D-9862-77691022ADFA.root |
| 2203991341 | Mar 7 00:25 | 88BF3C14-54AD-7E45-8797-4994EFE82986.root |
| 2339371214 | Mar 7 08:51 | 892523F7-C6FF-494F-8461-B3E3DBC57DE3.root |
| 2668691615 | Mar 10 05:11 | 89DBAF1A-4FA1-1648-9246-85C11062A495.root |
| 2400847737 | Mar 6 21:14 | 8AFFE559-2CBF-6345-9C55-7145B66197E2.root |
| 2700380794 | Mar 9 12:25 | 8EFAB728-DCA8-0A45-98ED-7C9C53B6C048.root |
| 2382195529 | Mar 10 09:21 | 8F52E0FE-67C6-C545-BF62-C97A6F7FB455.root |
| 2468068200 | Mar 10 05:00 | 93B3B40B-0718-2040-B34A-A3BFE1AEB25B.root |
| 4235763689 | Mar 9 12:34 | 946B42CB-88BF-A341-8F24-8623BEFA2A9E.root |
| 2316014095 | Mar 7 04:13 | 9478DE9A-577D-EE4D-9750-EDB28D841F23.root |
| 4251833732 | Mar 9 12:16 | 9965A9C8-6602-9E4C-B703-2EDA14314AD7.root |
| 2347801838 | Mar 7 07:19 | 99A1F378-4FD7-854B-AAB4-301D87DEC470.root |
| 2538406531 | Mar 10 10:17 | 9B1011DF-21D8-914F-8DBD-1C522570DFEB.root |
| 2555610393 | Mar 9 23:46 | 9C7509C9-1CCC-DD4B-A94F-C02A4236C12C.root |
| 2242711080 | Mar 7 09:50 | A14BA923-A696-2D49-8C01-831EABD2E236.root |
| 4249262160 | Mar 9 12:05 | A198F243-5A88-9249-8B55-916B7C656F21.root |
| 2534349969 | Mar 10 01:36 | A1C8BEF1-9BC5-6144-8895-EFBE775AF748.root |
| 2174366896 | Mar 9 18:50 | AD18FB4F-4F3C-104A-8FAE-33EED43C9268.root |
| 2216068686 | Mar 9 19:23 | B1F3D19A-41F3-214A-85D7-952DF48E8C91.root |
| 2374923333 | Mar 10 07:10 | B1F90348-9CB5-6D4F-90FD-6581F3C290F8.root |
| 1014610254 | Mar 10 00:37 | B283F544-A4A3-4446-9D0E-1A4E66CA82A6.root |
| 2550650634 | Mar 9 22:03 | B2BDDC9D-C260-AD45-806E-89BFCBDE5FE3.root |
| 2405712362 | Mar 10 11:38 | B98CE73F-BA85-2E4D-9363-F63F602B9570.root |
| 2294802748 | Mar 7 07:44 | C2C06CB4-8732-B940-B5D0-F079B43FDE2F.root |
| 2486507474 | Mar 9 21:28 | C43671BF-33DB-EB4D-8B94-36A879C601EB.root |
| 2251492357 | Mar 7 02:01 | C4F0740B-12F4-1A4A-8BDB-3939ED96A91A.root |
| 2221237562 | Mar 9 23:45 | CBB6C76E-5687-5D44-BB03-653B7D4F7EB5.root |
| 4262186495 | Mar 9 12:05 | D22B225D-2033-7742-86D2-3CCE223C531A.root |
| 2725530586 | Mar 10 08:50 | D2A5CFEA-3650-7448-AF74-C769E76BC16A.root |
| 2188089289 | Mar 10 00:01 | D3F54792-38BD-C64C-A06F-7CF060A0710E.root |
| 2656269564 | Mar 10 13:24 | D5840C83-E0B1-424F-82AD-04C49E96A978.root |
| 4264975694 | Mar 9 12:15 | D588BC02-AC6F-DD45-8961-77457743A91D.root |
| 2438886328 | Mar 10 08:58 | D5D50BAA-4438-8A44-8840-AA9EC3BD0E08.root |
| 2448753174 | Mar 9 23:09 | D919684D-CAAD-F840-9696-822BEC145076.root |
| 2427603982 | Mar 7 06:06 | D9911009-2D50-5B4A-AFE9-28925BA78532.root |
| 2209927727 | Mar 10 02:53 | DA5AC700-3A55-3040-B6EF-C50ECD1FD61E.root |
| 650498763 | Mar 10 04:12 | DC7D9F9C-9E83-4441-A2CB-AD0915A38B5C.root |
| 2199264845 | Mar 9 23:22 | DE4378DB-3985-BF48-96D3-CEC2DC42DB6B.root |
| 2358829858 | Mar 10 03:40 | E2229671-10CA-EB4A-8F4B-BCB86CC76681.root |
| 2350708497 | Mar 10 11:35 | E329B789-1133-194A-ACAA-E3041B8AD666.root |
| 2720088548 | Mar 7 06:42 | E81836F7-B548-FE4E-8013-6F9A7124B6C5.root |
| 2292291939 | Mar 10 04:01 | E83C880A-639F-D843-84D6-3BC3811D0F4A.root |
| 2326510254 | Mar 9 19:07 | E842FCFE-91FB-BC4B-AF54-6D8C75BB9FC7.root |
| 2280502206 | Mar 7 10:14 | EACA83F5-0936-A746-9421-2B608DF74E1E.root |
| 2262126230 | Mar 9 19:50 | EC14EB67-1F83-1A47-88DC-99D735BE0050.root |
| 212933465 | Mar 7 02:36 | EC95C812-9DA7-874C-8C9E-607BE113D97F.root |
| 2200736948 | Mar 9 16:33 | F079A3C9-D793-1A41-8CB7-5816345C33F2.root |
| 2275607250 | Mar 6 22:13 | F143CA2A-FA7E-3242-8528-3C0E9D50002D.root |
| 2213651594 | Mar 10 01:40 | F30FA601-A485-5C42-9E33-9FA0FE4BAB58.root |
| 2180941881 | Mar 7 04:42 | F5FAC10D-29B1-AA4B-92E6-C45C75CBB8B9.root |
| 2309552965 | Mar 9 14:58 | F69C665B-5E60-5042-A19C-504D0444F8E1.root |
| 2366047674 | Mar 10 06:25 | F6A15375-886A-F242-A8C6-57F218025ECE.root |
| 2467753836 | Mar 10 02:15 | F916E4B7-0C33-8345-9137-4F3AC297CADA.root |
| 4240185883 | Mar 9 12:10 | FCFA3963-AB4F-6141-92B0-4C64F57733C4.root |
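A file count and total size like the 103 files / 264 GB quoted above can be checked against an `ls -l` listing by summing its size column. A minimal Python sketch, assuming GNU-style `ls -l` output with the size in bytes in the fifth column; `summarise_ls` is a hypothetical helper, and the sample is just three lines copied from the listing. The `total` line is skipped because `ls` reports allocated blocks there, not the sum of the file sizes.

```python
from typing import Tuple


def summarise_ls(listing: str) -> Tuple[int, int]:
    """Return (number of files, total size in bytes) from `ls -l` text.

    Only regular-file lines (mode string starting with '-') are counted.
    The leading 'total N' line is skipped: it reports allocated blocks,
    not the sum of the file sizes.
    """
    count = 0
    total_bytes = 0
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) < 9 or not fields[0].startswith("-"):
            continue
        count += 1
        total_bytes += int(fields[4])  # 5th column of `ls -l` is the size in bytes
    return count, total_bytes


if __name__ == "__main__":
    sample = """total 514803101
-rw-r--r--. 1 cmsprd zh 2443985009 Mar 10 13:54 07C7B80E-DCCE-8A4B-8DAA-7C2126016E4C.root
-rw-r--r--. 1 cmsprd zh 2390422647 Mar 6 20:13 0F1DDE45-97EC-7E4C-AFF5-777CDD655AB5.root
-rw-r--r--. 1 cmsprd zh 212933465 Mar 7 02:36 EC95C812-9DA7-874C-8C9E-607BE113D97F.root"""
    n, size = summarise_ls(sample)
    # Decimal gigabytes (10**9 bytes); divide by 2**30 instead for GiB.
    print(f"{n} files, {size / 1e9:.1f} GB")
```

Whether a quoted total is in GB (10^9 bytes) or GiB (2^30 bytes) changes the figure by about 7%, so it is worth stating which one is meant.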

**Magic Quantum Mechanic** (Joined: 8 Apr 15; Posts: 849; Credit: 15,973,123; RAC: 3,955)

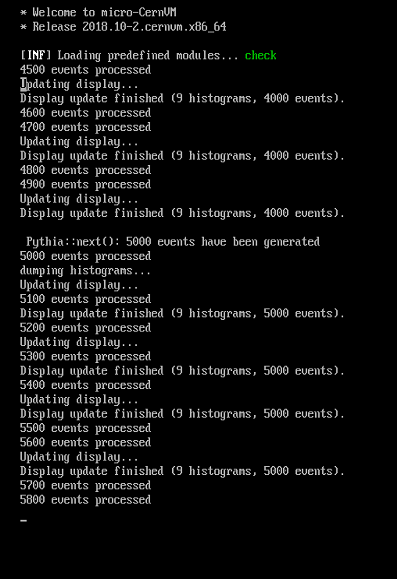

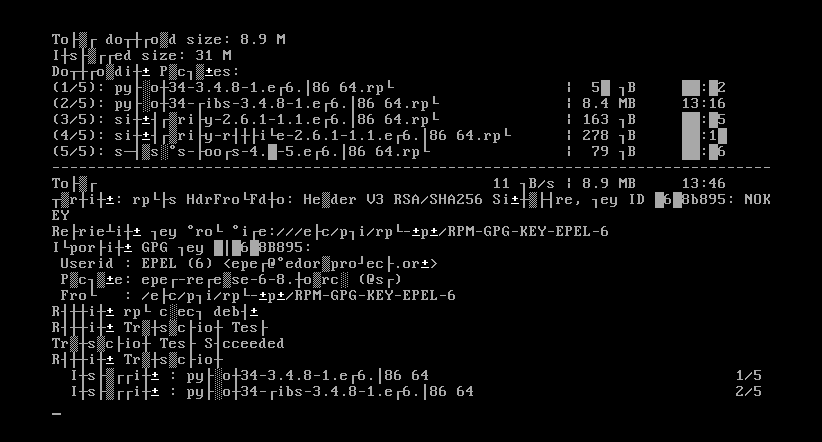

17 Valids in a row now, and 3 getting close to finishing. I added another host to my CMS fleet, testing a Ryzen CPU running these CMS tasks and a couple of GPU tasks via the core at the same time... the first time I've ever run those GPU tasks without a video card. The VM console looked different for the first few tasks, but it's now back to what we usually get.


(Joined: 13 Feb 15; Posts: 1262; Credit: 1,025,626; RAC: 323)

What should the right memory setting for the VM be? The VM is created with 1896 MB, whereas according to CMS_2016_03_22.xml it should be 2048 MB, and StartLog writes `allocating Memory: 3000, Swap: 100.00%`. The 2nd job is running and so far 'only' 18736k of swap is used.


(Joined: 13 Feb 15; Posts: 1262; Credit: 1,025,626; RAC: 323)

My second job FAILED, but from my side I can't explain the reason. The dashboard shows:

| Field | Value |
|---|---|
| GridCertificateSubject | /O=Volunteer Computing/O=CERN |
| OverallWallClock | 4364 |
| DboardGridEndId | A |
| WNIp | 193.238.52.47 |
| CreatedTimeStamp | 2019-03-10T08:27:56.110614 |
| Source | tool |
| DboardStatusEnterTimeStamp | 2019-03-11T08:58:11 |
| TargetCE | Unknown |
| Memory | 0 |
| GridEndStatusTimeStamp | 2019-03-11T09:04:15 |
| SchedulerJobId | 5a797afc-d932-48f1-9137-2b53c6f73568-19_0 |
| GridStatusTimeStamp | 2019-03-11T09:04:15 |
| EventRange | 5a797afc-d932-48f1-9137-2b53c6f73568-19 |
| DboardFirstInfoTimeStamp | 2019-03-10T15:11:38 |
| ExecutableFinishedTimeStamp | 1970-01-01T00:00:00 |
| ShortCEName | T3_CH_Volunteer |
| GridEndStatusId | FAILED |
| DboardStatusId | T |
| NCores | 1 |
| JobLog | unknown |
| DboardLatestInfoTimeStamp | 2019-03-11T09:04:15 |
| StartedRunningTimeStamp | 2019-03-11T07:45:27 |
| JobExecExitTimeStamp | 2019-03-11T08:58:11 |
| JobMonitorId | unknown |
| LongCEName | T3_CH_Volunteer |
| CPUTimeCMSSW | 0 |
| StageOutTime | 0 |
| WallClockStageOut | 0 |
| FinishedTimeStamp | 2019-03-11T08:58:11 |
| GridName | local_unknown |
| WallClockTimeCMSSW | 0 |
| WNHostName | 38-37-6301 |
| StageOutSE | unknown |
| OverallCPU | 3465.02 |
| JobExecExitCode | 0 |
| ScheduledTimeStamp | 1970-01-01T00:00:00 |
| GridStatusReason | Job failed at the site |
| SubmittedTimeStamp | 2019-03-10T15:11:38 |
| TimeOutFlag | 0 |
| JobApplExitCode | null |
| JobApplExitReasonId | unknown |
| GridFinishedTimeStamp | 1970-01-01T00:00:00 |
| TaskMonitorId | wmagent_ireid_TC_OneTask_IDR_CMS_Home_190307_210429_7393 |
| efficiency | 0.7940009165902842 |
| SchedulerName | SIMPLECONDOR |
| GridStatusName | FAILED |
| IpValue | 193.238.52.47 |
| SiteName | T3_CH_Volunteer |
| AppStatusReasonValue | unknown |
| VOJobId | Unknown |
| GridEndStatusReasonId | Job failed at the site |
| PilotFlag | 0 |
| RbName | unknown |
| DboardJobEndId | F |
| NextJobId | 0 |

Between the 2nd and 3rd job, there was a long idle time before I got the 3rd one.
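The numbers in that dashboard record are at least self-consistent: 07:45:27 to 08:58:11 is 1 h 12 min 44 s = 4364 s, matching OverallWallClock, and 3465.02 / 4364 ≈ 0.794 matches the efficiency field. That suggests the job ran for over an hour at roughly 79% CPU before being marked FAILED, rather than dying at start-up. A minimal Python sketch of the arithmetic; which timestamps the dashboard really uses is an inference from the matching numbers, not documented behaviour, and the helper names are hypothetical.

```python
from datetime import datetime


def wall_clock_seconds(started: str, finished: str) -> float:
    """Seconds elapsed between two ISO-8601 timestamps (as printed by the dashboard)."""
    return (datetime.fromisoformat(finished) - datetime.fromisoformat(started)).total_seconds()


def cpu_efficiency(cpu_seconds: float, wall_seconds: float) -> float:
    """CPU time divided by wall-clock time; for a single-core job, 1.0 means the core was always busy."""
    return cpu_seconds / wall_seconds


if __name__ == "__main__":
    # Values copied from the dashboard record above (assumed mapping:
    # StartedRunningTimeStamp -> JobExecExitTimeStamp gives OverallWallClock).
    wall = wall_clock_seconds("2019-03-11T07:45:27", "2019-03-11T08:58:11")
    eff = cpu_efficiency(3465.02, wall)  # OverallCPU / wall clock
    print(f"wall clock {wall:.0f} s, efficiency {eff:.4f}")
```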

**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)


**Magic Quantum Mechanic** (Joined: 8 Apr 15; Posts: 849; Credit: 15,973,123; RAC: 3,955)

> Oops, I slept in too long today and the queues drained... More jobs on their way.

No problem, Ivan... I didn't get up until a bit after noon today (old timers like us can't get out of bed the day after rolling 10 games at the local bowling alley)... my computers never take a nap.

**ivan** (Joined: 20 Jan 15; Posts: 1155; Credit: 8,361,722; RAC: 610)

> Oops, I slept in too long today and the queues drained... More jobs on their way.

I wish my back could take 10 games of bowling...

©2026 CERN